Introduction

In Part II of our ongoing discussion, we focus on how we performed sentiment analysis and spellcheck for text written in Hindi. We also introduce a novel “readability” analyzer, which predicts how hard a particular sentence is to read for an average reader. Finally, as stated in Part I of this series, we mention some open-source libraries that our NLP team is working on and that are under rapid development.

Dataset

As with all things Deep Learning, our initial focus was on creating a dataset that was large, context-relevant and sufficiently diverse. This is absolutely essential for Language Modelling (LM), wherein we train a model to understand the semantics, syntax, context, etc. of the underlying language. We also created a second, tagged dataset for the classification tasks, i.e. sentiment and emotional analysis. The dataset for the LM task was scraped from a variety of books written in Hindi on a multitude of topics - history, politics, science, etc. - and came to ~9.7 GB. The dataset for the classification tasks was scraped from a number of sources including, but in no way limited to, movie reviews, news articles, Twitter and product descriptions. Finally, we tagged each of the ~60,000 data-points with both a sentiment and an emotion.

Pre-processing

Once the datasets had been built, they needed to be “cleaned.” Apart from removing stop words and special characters, we also wanted to ensure that there was no transliterated text in the dataset, so that every data-point contained only Hindi content. Having achieved that, we turned our attention to Named Entity Recognition (NER) and Part-of-Speech (POS) tagging. After a lot of trials and tribulations, we finally obtained satisfactory results using a modified FLAIR multilingual model. This little breakthrough would prove instrumental in tackling the spellcheck functionality, as we were now able to handle the class of nouns efficiently. Finally, we experimented with a number of tokenizers - Punkt, TreeBankWord, SpaCy, etc. - to find the one best suited to vernacular (i.e. Indic) languages. We found SentencePiece to be the most suitable candidate. Now that we had these pieces of the puzzle in place, it was time to fire up those GPUs!
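As a rough illustration of the transliteration check described above, here is a minimal sketch that flags text containing non-Devanagari letters. The function names and the 0.95 threshold are our own assumptions for illustration, not the exact filter used in our pipeline.

```python
import unicodedata

# The Devanagari Unicode block spans U+0900 to U+097F.
DEVANAGARI_START, DEVANAGARI_END = 0x0900, 0x097F


def devanagari_ratio(text: str) -> float:
    """Fraction of letters and combining marks that fall in the Devanagari block."""
    # Keep only letters (L*) and marks (M*); digits, spaces and punctuation are ignored.
    letters = [ch for ch in text if unicodedata.category(ch)[0] in ("L", "M")]
    if not letters:
        return 0.0
    in_block = sum(DEVANAGARI_START <= ord(ch) <= DEVANAGARI_END for ch in letters)
    return in_block / len(letters)


def is_hindi_only(text: str, threshold: float = 0.95) -> bool:
    """Hypothetical filter: keep a data-point only if it is almost entirely Devanagari."""
    return devanagari_ratio(text) >= threshold


if __name__ == "__main__":
    for sample in ["यह फिल्म बहुत अच्छी है", "yeh film bahut acchi hai", "यह movie अच्छी है"]:
        print(sample, "->", is_hindi_only(sample))
```

A script-based check like this only catches Hindi written in Latin characters; it says nothing about English words that have been written in Devanagari, which would need a separate, vocabulary-based check.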

Language Modelling

Since no pre-trained Hindi language model was available online that both carried a “permissive” (i.e. MIT, Apache) license and had been trained on a large enough dataset, we decided to train our own. After weighing the pros and cons of various language modelling architectures - BERT, GPT-2, XLNet - we decided to go with GPT. Some of the features that went in its favor are: (i) the ability to generate embeddings from the trained language model, which would be useful in classification tasks; and (ii) the amenability of the model to trimming / pruning, so as to reduce inference time. Now, training on ~9 GB of data, even after parameter optimization, is very computationally intensive. But once we started testing the final trained model, the exercise proved to be well worth the time (and money) spent! The generated results showed a very intricate understanding of the syntax and semantics of the Hindi language, and seemed to lack any bias. The model reached a perplexity of 37 (lower is better). There is certainly a lot of scope for improvement, but once we had our Language Model in place, it was time to deploy it for the classification task.
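For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-probability the model assigns to each token of held-out text. The sketch below shows that calculation on a list of per-token log-probabilities; the function name is hypothetical, and in practice the values would come from the trained model's output on a validation set.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative natural-log probability per token)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_negative_log_likelihood = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_negative_log_likelihood)


if __name__ == "__main__":
    # A model that is always torn uniformly between 37 candidate tokens
    # assigns probability 1/37 to every token it sees.
    uniform = [math.log(1 / 37)] * 10
    print(perplexity(uniform))
```

Read this way, a perplexity of 37 means the model is, on average, about as uncertain as if it were choosing uniformly among 37 tokens at each step. The figure depends on the tokenizer, so it is only comparable between models that share the same vocabulary.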

Classification

There are two classification tasks which are of utmost importance to us - (i) Sentiment Analysis, i.e. positive, negative and neutral; and (ii) Emotional Analysis, i.e. joy, surprise, anger, fear and sadness. In keeping with our desire for uniform models / architectures across the different modules, we trained the GPT model on the tagged dataset for the purposes of sentiment analysis. As before, we used Stochastic Gradient Descent with Nesterov momentum. To ensure faster convergence, we used the 1-cycle policy, complementing it with the results of LR-Finder for the optimal learning rate. Our final model had an F1-score of 0.81 and an AUC of 0.77. We are continuously striving to improve these metrics. In order to find the words that correlate strongly with a particular emotion, we experimented with the Captum library for PyTorch. This allowed us to interpret our models properly, giving us greater confidence in our results.
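To make the 1-cycle policy concrete, here is a simplified, dependency-free sketch of the schedule's shape: the learning rate warms up to a peak (the value LR-Finder would suggest) and then anneals to a much smaller value. The function and parameter names are our own for illustration, and the cosine interpolation and default ratios are assumptions rather than the exact settings we trained with. In PyTorch, the built-in equivalents are `torch.optim.SGD(..., nesterov=True)` and `torch.optim.lr_scheduler.OneCycleLR`.

```python
import math


def one_cycle_lr(step: int, total_steps: int, max_lr: float,
                 pct_warmup: float = 0.3, div_factor: float = 25.0,
                 final_div_factor: float = 1e4) -> float:
    """Learning rate at `step` for a simplified two-phase 1-cycle schedule."""
    if not 0 <= step < total_steps:
        raise ValueError("step must be in [0, total_steps)")
    initial_lr = max_lr / div_factor          # starting point of the warm-up
    min_lr = initial_lr / final_div_factor    # value reached at the last step
    warmup_steps = max(1, int(pct_warmup * total_steps))

    def cosine(start: float, end: float, pct: float) -> float:
        # Cosine interpolation from `start` (pct=0) to `end` (pct=1).
        return end + (start - end) * (1 + math.cos(math.pi * pct)) / 2

    if step < warmup_steps:
        # Phase 1: ramp up from initial_lr towards max_lr.
        return cosine(initial_lr, max_lr, step / warmup_steps)
    # Phase 2: anneal from max_lr down to min_lr.
    decay_steps = max(1, total_steps - warmup_steps - 1)
    return cosine(max_lr, min_lr, (step - warmup_steps) / decay_steps)


if __name__ == "__main__":
    schedule = [one_cycle_lr(s, total_steps=100, max_lr=0.1) for s in range(100)]
    print(schedule[0], max(schedule), schedule[-1])
```

The full policy also cycles momentum in the opposite direction (high while the learning rate is low, and vice versa); that part is omitted here to keep the sketch short.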

Readability

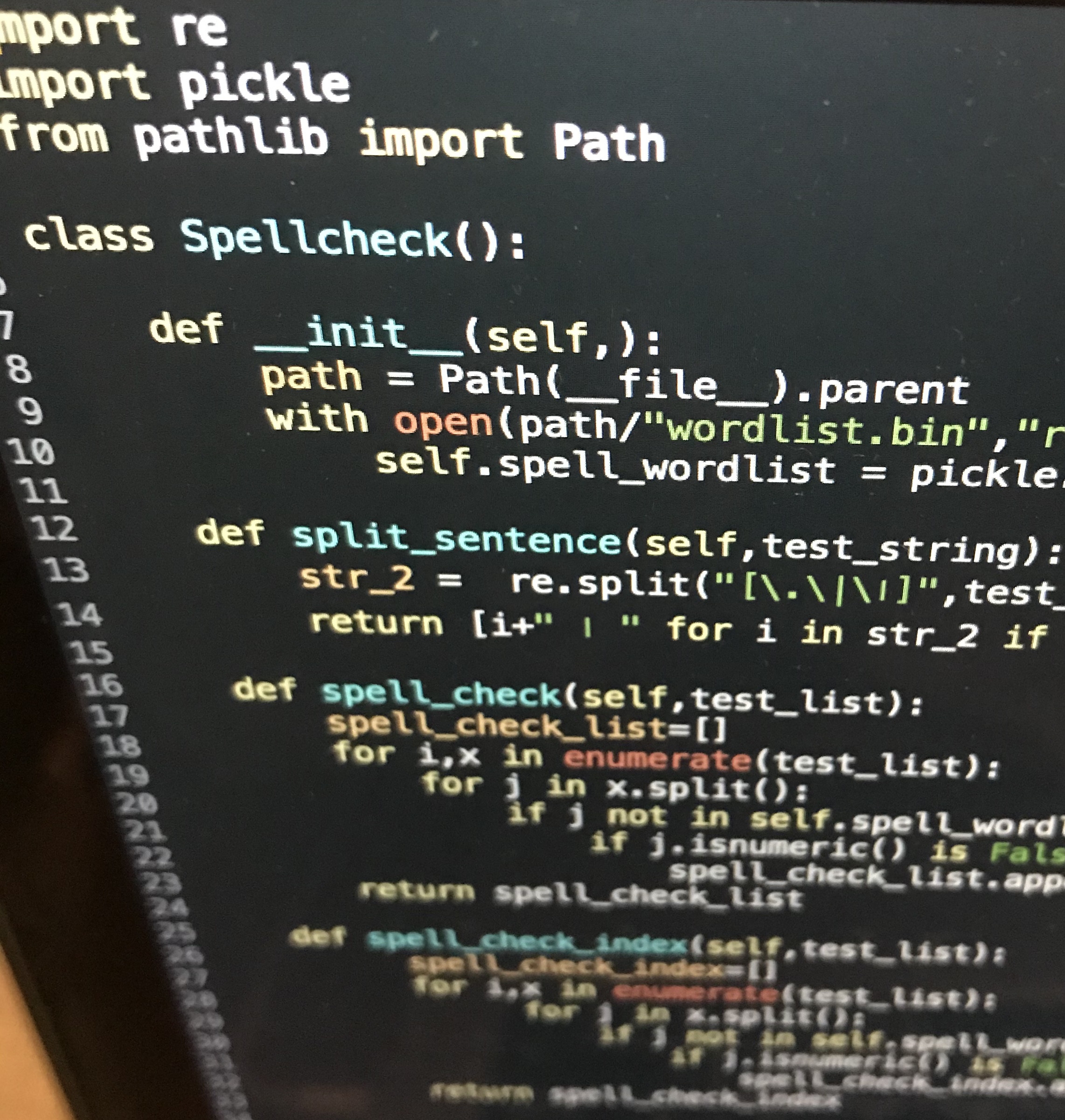

Now for the exciting part, and what we believe to be a marked contribution to the progress of vernacular NLP: the Readability Analyzer! Using TF-IDF on a 500 MB down-sampled version of the large dataset, we plotted a word-frequency graph. This helped us create a table linking each word to its general usage (i.e. how frequently or infrequently it occurs over a large enough dataset). This table was used as one metric, along with others, to determine how hard a particular sentence is to read, thereby helping a writer gauge the reading level required to understand their content. Finally, the same sampled dataset was used to create a corpus of unique words to back our implementation of the spellchecker. These words were stored in a highly optimized dictionary, to enable deployment in real-time scenarios. We were also able to recommend corrections for misspelt words, thanks to the embeddings generated in-house during the GPT-based language modelling. Don’t you just love it when such disparate things fall so beautifully into place!
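To give a flavour of the frequency-based part of the metric, the sketch below builds a word-rarity table from a toy corpus using a smoothed inverse document frequency, then scores a sentence by the average rarity of its words. Everything here is an illustrative assumption: the function names, the whitespace tokenization, the smoothing formula and the treatment of unseen words are ours for the example, and the real analyzer combines this signal with other metrics.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List


def build_idf_table(documents: Iterable[List[str]]) -> Dict[str, float]:
    """Map each word to a smoothed inverse document frequency (rarer = higher)."""
    doc_freq: Counter = Counter()
    n_docs = 0
    for tokens in documents:
        n_docs += 1
        doc_freq.update(set(tokens))  # count each word at most once per document
    return {word: math.log((1 + n_docs) / (1 + df)) + 1.0
            for word, df in doc_freq.items()}


def rarity_score(tokens: List[str], idf: Dict[str, float]) -> float:
    """Mean IDF of the tokens; unseen words get the highest IDF in the table."""
    if not tokens:
        return 0.0
    unseen = max(idf.values(), default=0.0)
    return sum(idf.get(tok, unseen) for tok in tokens) / len(tokens)


# Toy corpus; whitespace splitting stands in for a real tokenizer.
corpus = [s.split() for s in ["यह किताब अच्छी है", "यह फिल्म अच्छी है", "यह घर बड़ा है"]]
idf = build_idf_table(corpus)
easy = "यह अच्छी है".split()
hard = "यह दार्शनिक किताब है".split()

if __name__ == "__main__":
    print("easy:", rarity_score(easy, idf))
    print("hard:", rarity_score(hard, idf))
```

A sentence built from common words scores lower than one containing rare or unseen words, which is the intuition behind using word frequency as one input to a reading-level estimate.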

Conclusion

So, as can be seen, we have made a lot of progress towards objectively understanding the Hindi language. This is a very positive first step in building a comprehensive tool for vernacular languages. As for the next steps, our readers can expect two major updates to our Hindi tool in the short term - (i) emotional analysis of content, along with highlighting of the words contributing to a particular emotion; and (ii) a basic grammar check that also suggests a subset of possible corrections. For the English tool, our readers can look forward to two major updates in the mid-term - (i) added automation to help users make optimized decisions with regard to the recommendations; and (ii) an in-house speech-to-text analyzer for any audio format uploaded to our tool. We’re very excited about what the future holds.

In conclusion, I would like to mention some GitHub repos that our NLP team uses in its day-to-day work:

- DVC-Download: which helps practitioners keep track of their datasets (and other large files) using DVC and S3 buckets.

- Classification-Report: which helps you visualize the model's weights, biases, gradients and losses during the training process.

- Multi-label Classification: which is a compendium of notebooks that guides users on working with datasets with multiple tags.

Till our next blog, keep writing! And let the emotions flow.
